Diving into MPEG-DASH with ffmpeg and NGINX

By Phillip Adler

What Is MPEG-DASH?

MPEG-DASH (Dynamic Adaptive Streaming over HTTP) is an adaptive-bitrate protocol for delivering media to the browser over HTTP, and a competitor to HLS. It involves fragmenting an mp4 file into smaller pieces called m4s files and creating manifest data that holds meta-information about the components of the whole media (usually an audio track and a video track for a movie). This enables adaptive bitrate switching, where the player changes quality mid-playback, plus seeking that only loads the segments it needs. Serving the entire video as one mp4 file can't switch quality like this.

What does this article cover and not cover in terms of Media Distribution?

The goal of this article is to demonstrate the steps needed to convert a whole mp4 file so it can be streamed to MPEG-DASH clients, using the output of an ffmpeg command. We then analyze that output: how our commands relate to it, and how it relates to the behavior of a client ingesting MPEG-DASH data. It's solely a Server → Peer application, where either our server application generated the video or a client uploaded an entire video.

This article does not cover live-streaming media data (I may write about this in the future). Live streaming involves ingesting data from streamers using protocols such as RTSP, RTMP, WHIP, or SRT. The content is then distributed via WebRTC or, in most cases, through an HTTP-based method with wide browser support such as HLS or DASH. This article is about taking an offline video on the server and making it publicly streamable.

It also does not cover Peer to Peer content distribution, or Peer - Server - Peer setups in low-latency, low-quality applications such as video conferencing and some web-based games.

What is FFMPEG?

The FFMPEG Project

https://ffmpeg.org/ffmpeg.html

FFMPEG is an open-source multimedia project with a collection of tools for working with common container formats and codecs. It can create and read RTP streams, transcode videos, and produce MPEG-DASH output (fragmented mp4 segments and manifest files). It can also change frame rates, transcode at specific bitrates, and more.

For this article we will be using mp4 video samples:

https://test-videos.co.uk/bigbuckbunny/mp4-h264

Briefly, what is an mp4 File?

An MP4 file is a multimedia container format. It holds the contents themselves, which are referred to as streams, along with metadata about those contents. To dig further into mp4 file contents and track information, look up ES (Elementary Streams) and PES (Packetized Elementary Streams), as well as container format details such as MPEG-4 Part 14. Long story short, an mp4 file contains a list of audio, video, and subtitle streams, which users see when loading a video, along with related meta-information. The point of this digression is that the mp4 container itself does not determine how a video or audio track is compressed. That is specified by the track's codec (and, for video, its pixel format).

An Example of Contents in a General Purpose Media File:

https://developer.mozilla.org/en-US/docs/Learn/HTML/Multimedia_and_embedding/Video_and_audio_content

But briefly, what you need to know is:

MP4 Container

→ []Audio Streams

Stream #1 = {Codec, Data, Metadata}

Stream #2 = {Codec, Data, Metadata}

The codec is the actual compression scheme and representation of the data.

Getting Started With FFMPEG

Let's List What Is Contained Inside Our MP4 File

ffmpeg -i big_buck_bunny.mp4

Input #0, mov,mp4,m4a,3gp,3g2,mj2, from 'big_buck_bunny.mp4':
  Metadata:
    major_brand : mp42
    minor_version : 1
    compatible_brands: mp42avc1
    creation_time : 2010-02-09T01:55:39.000000Z
  Duration: 00:01:00.10, start: 0.000000, bitrate: 733 kb/s
    Stream #0:0(eng): Audio: aac (LC) (mp4a / 0x6134706D), 22050 Hz, stereo, fltp, 65 kb/s (default)
    Metadata:
      creation_time : 2010-02-09T01:55:39.000000Z
      handler_name : Apple Sound Media Handler
    Stream #0:1(eng): Video: h264 (Constrained Baseline) (avc1 / 0x31637661), yuv420p(tv, smpte170m/smpte170m/bt709), 640x360, 612 kb/s, 23.96 fps, 24 tbr, 600 tbn, 1200 tbc (default)
    Metadata:
      creation_time : 2010-02-09T01:55:39.000000Z
      handler_name : Apple Video Media Handler
    Stream #0:2(eng): Data: none (rtp / 0x20707472), 45 kb/s
    Metadata:
      creation_time : 2010-02-09T01:55:39.000000Z
      handler_name : hint media handler
    Stream #0:3(eng): Data: none (rtp / 0x20707472), 5 kb/s
    Metadata:
      creation_time : 2010-02-09T01:55:39.000000Z
      handler_name : hint media handler

We can see that we have a single video track, a single audio track, and two data tracks (RTP hint tracks). The data tracks will not be read as input to our final MPEG-DASH output, because our scripts only map the first video stream and the first audio stream.

We can also see the codecs for the Audio (aac) and Video (h264), the pixel format (yuv420p), and other information.

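For more about pixel formats, in case it's confusing why they matter:

YUV refers to the color space representation: a luma (brightness) channel Y plus two chroma (color) channels U and V. The 420 refers to 4:2:0 chroma subsampling, which is described over a 4x2 block of pixels. Every pixel keeps its own luma sample, while chroma is stored at half the horizontal and half the vertical resolution, so each 2x2 group of pixels shares one U and one V sample.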
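To make the 4:2:0 savings concrete, here is a small bash arithmetic sketch. The function name yuv420p_frame_bytes is hypothetical, and it assumes 8-bit samples and even frame dimensions. It computes the size of one raw, uncompressed frame: one luma byte per pixel plus two chroma planes at a quarter of the resolution each.

```shell
#!/bin/bash
# yuv420p_frame_bytes WIDTH HEIGHT (hypothetical helper)
# Size in bytes of one raw 8-bit yuv420p frame (assumes even width and height).
yuv420p_frame_bytes() {
  local width=$1 height=$2
  local luma=$((width * height))                 # Y: one sample per pixel
  local chroma=$(((width / 2) * (height / 2)))   # U or V: one sample per 2x2 block
  echo $((luma + 2 * chroma))
}

frame=$(yuv420p_frame_bytes 640 360)
echo "raw 640x360 yuv420p frame: $frame bytes"                 # 345600 bytes
echo "raw bitrate at 25 fps: $((frame * 8 * 25 / 1000)) kb/s"  # 69120 kb/s
```

Raw 640x360 video at 25 fps would be roughly 69 Mb/s. The h264 track in the output above is 612 kb/s, which shows how much work the codec does on top of the subsampling.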

What Does MPEG-DASH Consist Of?

ffmpeg's MPEG-DASH output consists of three parts:

- a manifest file (.mpd, in XML format) that lists the streams a client can choose from
- init files, which contain meta-information about each stream
- the media segments (the m4s chunks) themselves

On the client side, a DASH JavaScript player such as dash.js reads the manifest and dynamically chooses which segments to fetch, in most instances based on network bandwidth.

Setting up Nginx and Our HTML File

For this example, I simply served static content with Nginx. There are various ways you can do this, but in a config file you can do something like:

server {
    location / {
        root /path/to/your/video/folder;
        index index.html;
    }
}

Our index.html file (it just shows a video on the screen). Put this in the same folder:

<video id="videoPlayer" autoplay="autoplay" controls="controls" width="960" height="540">
</video>
<!-- dash player: browsers generally can't play DASH natively, so dash.js plays it through Media Source Extensions -->
<script src="http://cdn.dashjs.org/v3.1.0/dash.all.min.js"></script>
<script>
  /* initialize the dash.js player in the #videoPlayer element */
  (function () {
    const url = "dash.mpd";
    const player = dashjs.MediaPlayer().create();
    player.initialize(document.querySelector("#videoPlayer"), url, false);
  })();
</script>

Here we are just loading dash.js from a CDN. You can also download the script from the CDN and serve it as static content from your own file system, using something like src="/js/dash.js".

This assumes that the DASH manifest and all the segment files are in the same folder as index.html. To change this, modify the url variable.

Using FFMPEG

In our examples, we will use different bitrates, frame sizes, rate-control buffer sizes, and max bitrates for the output video track, but will keep the same audio track.

First Example FFMPEG Script → Fragment MP4 File Into Separate Audio and Video Adaptation Sets

Comment lines between backslash-continued lines would break the command. In this annotated version the options are collected in a bash array instead, so every option keeps its comment and the script still runs as-is.

#!/bin/bash
VIDEO_IN=$1
Folder_OUT=$2 # Make Sure Both Are Specified
SEG_DURATION=4 # Size of Segments in Seconds, if FPS = 25, It Means 100 Frames Per Segment
FPS=25 # Frames Per Second
GOP_SIZE=100
# Long story short, encoded video is made up of I, P, and B frames.
# I-frames are anchor (key) frames: they are encoded only from their own pixels,
# with something like frequency compression plus entropy coding (lossy + lossless),
# so they can be decoded independently.
# P- and B-frames, however, reference other frames, so mostly only the
# differences from those frames need to be encoded.
PRESET_P=veryslow
## Make Sure That SEG_DURATION x FPS = GOP_SIZE
## Then every segment starts with a keyframe, so a video decoder can go to the beginning
## of any segment and know that decoding it is independent of previous segments
V_SIZE_1=416x234
V_SIZE_2=640x360
# The two frame sizes we will be re-encoding with

# Now, the actual ffmpeg command with explanations
args=(
  -i "$VIDEO_IN" -y
  # -i => input file, -y => overwrite output files
  -preset "$PRESET_P" -keyint_min "$GOP_SIZE" -g "$GOP_SIZE" -sc_threshold 0 -r "$FPS"
  -c:v libx264 -pix_fmt yuv420p -c:a aac -b:a 128k -ac 1 -ar 44100
  # -preset => libx264 speed vs. compression trade-off (veryslow = best compression, slowest encode)
  # -keyint_min => minimum keyframe interval; with -g and -sc_threshold 0 this gives one keyframe every GOP_SIZE frames
  # -g => GOP (group of pictures) size, the maximum keyframe interval in frames
  # -sc_threshold 0 => no extra keyframes on scene cuts
  # -r => frames per second
  # -c:v => codec for video
  # -pix_fmt => pixel format, i.e. how the decoded video images store color
  # -c:a => codec for audio, -b:a => audio bitrate
  # -ac => number of audio channels, -ar => audio sampling rate, here 44.1 kHz
  -map 0:v:0 -s:0 "$V_SIZE_2" -b:v:0 800k -maxrate:0 1.10M -bufsize:0 1.75M
  -map 0:v:0 -s:1 "$V_SIZE_1" -b:v:1 145k -maxrate:1 155k -bufsize:1 220k
  # -map 0:v:0 => select from input [index] 0 -> video stream -> [index] 0
  # -s:n => frame size of the nth output stream
  # -b:v:n => target bitrate of the nth video output
  # -maxrate:n => max bitrate of the nth output
  # -bufsize:n => rate-control buffer size of the nth output
  -map 0:a:0
  # Select the first audio stream from the input, re-encoded with the audio options above
  -init_seg_name init\$RepresentationID\$.m4s -media_seg_name chunk\$RepresentationID\$-\$Number%03d\$.m4s
  -use_template 1 -use_timeline 1
  -seg_duration "$SEG_DURATION" -adaptation_sets "id=0,streams=v id=1,streams=a"
  -f dash "$Folder_OUT/dash.mpd"
  # init_seg_name => we will show later what init segments are for
  # media_seg_name => name of all the chunks that will make up the output
  # $RepresentationID$ => DASH template parameter; we will talk about Representation IDs
  # in the final output manifest files
)
ffmpeg "${args[@]}"

Dash1.sh
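The $RepresentationID$ and $Number%03d$ placeholders are filled in per representation and per segment. A client reading the manifest performs the same substitution to build segment URLs. Below is a minimal bash sketch using a hypothetical helper, expand_template. It handles only the two identifiers used in these scripts; the DASH spec also defines others, such as $Time$ and $Bandwidth$, and other number widths.

```shell
#!/bin/bash
# expand_template TEMPLATE REPRESENTATION_ID [NUMBER] (hypothetical helper)
# Substitutes $RepresentationID$ and $Number%03d$ the way the segment names are built.
expand_template() {
  local template=$1 rep_id=$2 number=${3:-0} padded
  printf -v padded '%03d' "$number"                  # 5 -> 005
  template=${template//'$RepresentationID$'/$rep_id} # quoted pattern = literal match
  template=${template//'$Number%03d$'/$padded}
  printf '%s\n' "$template"
}

expand_template 'init$RepresentationID$.m4s' 1                  # init1.m4s
expand_template 'chunk$RepresentationID$-$Number%03d$.m4s' 1 5  # chunk1-005.m4s
```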

#!/bin/bash
VIDEO_IN=$1
Folder_OUT=$2
SEG_DURATION=4
FPS=25
GOP_SIZE=100
PRESET_P=veryslow
V_SIZE_1=416x234
V_SIZE_2=640x360
ffmpeg -i "$VIDEO_IN" -y \
-preset "$PRESET_P" -keyint_min "$GOP_SIZE" -g "$GOP_SIZE" -sc_threshold 0 -r "$FPS" -c:v libx264 -pix_fmt yuv420p -c:a aac -b:a 128k -ac 1 -ar 44100 \
-map 0:v:0 -s:0 "$V_SIZE_2" -b:v:0 800k -maxrate:0 1.10M -bufsize:0 1.75M \
-map 0:v:0 -s:1 "$V_SIZE_1" -b:v:1 145k -maxrate:1 155k -bufsize:1 220k \
-map 0:v:0 -s:2 "$V_SIZE_2" -b:v:2 500k -maxrate:2 1M -bufsize:2 1.5M \
-map 0:a:0 \
-init_seg_name init\$RepresentationID\$.m4s -media_seg_name chunk\$RepresentationID\$-\$Number%03d\$.m4s \
-use_template 1 -use_timeline 1 \
-seg_duration "$SEG_DURATION" -adaptation_sets "id=0,streams=v id=1,streams=a" \
-f dash "$Folder_OUT/dash.mpd"

Dash2.sh

#!/bin/bash
VIDEO_IN=$1
Folder_OUT=$2
SEG_DURATION=4
FPS=10
GOP_SIZE=40
PRESET_P=veryslow
V_SIZE_1=416x234
ffmpeg -i "$VIDEO_IN" -y \
-preset "$PRESET_P" -keyint_min "$GOP_SIZE" -g "$GOP_SIZE" -sc_threshold 0 -r "$FPS" -c:v libx264 -pix_fmt yuv420p -c:a aac -b:a 128k -ac 1 -ar 44100 \
-map 0:v:0 -s:0 "$V_SIZE_1" -b:v:0 145k -maxrate:0 155k -bufsize:0 220k \
-map 0:a:0 \
-init_seg_name init\$RepresentationID\$.m4s -media_seg_name chunk\$RepresentationID\$-\$Number%03d\$.m4s \
-use_template 1 -use_timeline 1 \
-seg_duration "$SEG_DURATION" -adaptation_sets "id=0,streams=v id=1,streams=a" \
-f dash "$Folder_OUT/dash.mpd"

Dash3.sh, 3rd Dash File
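All three scripts depend on SEG_DURATION x FPS = GOP_SIZE. A tiny bash guard can catch a mismatch before a long veryslow encode starts. This is a hypothetical helper (check_gop), not part of ffmpeg. It assumes whole-number SEG_DURATION and FPS values, as in these scripts, where -r forces the output frame rate.

```shell
#!/bin/bash
# check_gop SEG_DURATION FPS GOP_SIZE (hypothetical helper)
# Succeeds only if every segment will start exactly on a keyframe.
check_gop() {
  local seg_duration=$1 fps=$2 gop_size=$3
  local frames_per_segment=$((seg_duration * fps))
  if [ "$frames_per_segment" -ne "$gop_size" ]; then
    echo "GOP_SIZE=$gop_size, but SEG_DURATION x FPS = $frames_per_segment" >&2
    return 1
  fi
}

check_gop 4 25 100 && echo "Dash1/Dash2 settings OK"
check_gop 4 10 40 && echo "Dash3 settings OK"
```

Inside a script, you would call it right before ffmpeg, for example `check_gop "$SEG_DURATION" "$FPS" "$GOP_SIZE" || exit 1`.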

Run one of these scripts with the output folder set to the root from your Nginx location block (the same folder as index.html). Then load the page served by Nginx on your local machine. It should look like a plain video in the browser.

Looking Into The Contents of The Outputs

Reconstructing MP4 Files, What are m4s files?

The output m4s files are not complete mp4 files on their own. They are fragments with a particular MIME type (audio/mp4 or video/mp4, as listed in the manifest).

The meta-information about the tracks (codecs, frame size, sampling rate, etc.) lives only in the init files (init0.m4s for Representation 0, init1.m4s for Representation 1, and so on).

If you run ffprobe on init1.m4s → You’ll get the codec format

If you run ffprobe on chunk0-005.m4s → You'll get an error saying the input data is invalid.

But if you combine init1.m4s with chunk1-001.m4s, chunk1-002.m4s, … (segments of the same representation), ffprobe works again.

Let's Try This

cat init1.m4s >> file

cat chunk1-001.m4s >> file

ffprobe now works on file, and you can play it back like an mp4 file.

You can now see why the init files are necessary, because they encode metainformation about the rest of that “stream” or track
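Doing this by hand for every chunk gets tedious. Here is a minimal bash sketch of a hypothetical helper, rebuild_track. It concatenates one representation's init segment with all of its media segments, assuming the init$RepresentationID$.m4s and chunk$RepresentationID$-$Number%03d$.m4s names from the manifest. The zero padding from %03d is what makes the shell's alphabetical glob order match playback order, for up to 999 segments.

```shell
#!/bin/bash
# rebuild_track REPRESENTATION_ID OUTPUT_FILE (hypothetical helper)
# Writes the init segment followed by every media segment of one representation.
rebuild_track() {
  local rep_id=$1 out=$2
  # Glob results are sorted, so chunk3-001 comes before chunk3-002, and so on.
  # The "-" after the id keeps chunk1-... from matching chunk10-...
  local segments=("chunk${rep_id}"-[0-9][0-9][0-9].m4s)
  if [ ! -e "${segments[0]}" ]; then
    echo "no segments found for representation $rep_id" >&2
    return 1
  fi
  cat "init${rep_id}.m4s" "${segments[@]}" > "$out"
}

# Example, run inside the output folder (audio track from dash2.sh):
# rebuild_track 3 audio.mp4 && ffprobe audio.mp4
```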

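This is the ISO Base Media File Format (ISOBMFF) that mp4 is built on, in its fragmented form: an init segment plus media segments. Browsers consume this form through Media Source Extensions when streaming over the web.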

https://www.w3.org/TR/mse-byte-stream-format-isobmff/
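To see why init + chunk works, you can list the top-level ISOBMFF boxes of each file. Every box starts with a 4-byte big-endian size followed by a 4-byte type. Per the byte stream format linked above, an initialization segment carries ftyp and moov boxes. A media segment carries moof and mdat boxes, possibly after others such as styp or sidx. Below is a minimal bash sketch using od and dd; list_boxes is a hypothetical helper. It does not handle the special size values 0 (the box runs to the end of the file) or 1 (a 64-bit size follows).

```shell
#!/bin/bash
# list_boxes FILE (hypothetical helper)
# Prints "type size" for each top-level ISOBMFF box in FILE.
list_boxes() {
  local file=$1 offset=0 total size type
  total=$(($(wc -c < "$file")))              # arithmetic strips any padding from wc
  while [ "$offset" -lt "$total" ]; do
    if [ $((offset + 8)) -gt "$total" ]; then
      echo "truncated box header at offset $offset" >&2
      return 1
    fi
    # 4-byte big-endian box size
    size=$(od -An -tu1 -j "$offset" -N4 "$file" |
      awk '{ printf "%d", $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 }')
    # 4-character box type, e.g. ftyp, moov, moof, mdat
    type=$(dd if="$file" bs=1 skip=$((offset + 4)) count=4 2>/dev/null)
    echo "$type $size"
    if [ "$size" -lt 8 ]; then               # 0 or 1: special sizes, not handled here
      echo "unsupported box size $size" >&2
      return 1
    fi
    offset=$((offset + size))
  done
}

# Example, inside the output folder:
# list_boxes init3.m4s
# list_boxes chunk3-001.m4s
```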

cat init3.m4s >> playback.m4s

cat chunk3-001.m4s >> playback.m4s

ffprobe playback.m4s

Input #0, mov,mp4,m4a,3gp,3g2,mj2, from 'playback.m4s':
  Metadata:
    major_brand : iso5
    minor_version : 512
    compatible_brands: iso6mp41
    encoder : Lavf58.29.100
  Duration: 00:00:03.95, start: -0.023220, bitrate: 133 kb/s
    Stream #0:0(und): Audio: aac (LC) (mp4a / 0x6134706D), 44100 Hz, mono, fltp, 130 kb/s (default)
    Metadata:
      handler_name : SoundHandler

If you play this in an mp4 player, you get audio playback: representation 3 is the audio track from the 2nd DASH shell script (dash2.sh).

You can also “convert it” back to mp4 using

ffmpeg -i playback.m4s -c copy playback.mp4

Input #0, mov,mp4,m4a,3gp,3g2,mj2, from 'playback.m4s':
  Metadata:
    major_brand : iso5
    minor_version : 512
    compatible_brands: iso6mp41
    encoder : Lavf58.29.100
  Duration: 00:00:03.95, start: -0.023220, bitrate: 133 kb/s
    Stream #0:0(und): Audio: aac (LC) (mp4a / 0x6134706D), 44100 Hz, mono, fltp, 130 kb/s (default)
    Metadata:
      handler_name : SoundHandler
Output #0, mp4, to 'playback.mp4':
  Metadata:
    major_brand : iso5
    minor_version : 512
    compatible_brands: iso6mp41
    encoder : Lavf58.29.100
    Stream #0:0(und): Audio: aac (LC) (mp4a / 0x6134706D), 44100 Hz, mono, fltp, 130 kb/s (default)
    Metadata:
      handler_name : SoundHandler

Looking at the MPD file

Run ./dash2.sh ./big_buck_bunny.mp4 . and open the generated dash.mpd:

<?xml version="1.0" encoding="utf-8"?>
<MPD xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
  xmlns="urn:mpeg:dash:schema:mpd:2011"
  xmlns:xlink="http://www.w3.org/1999/xlink"
  xsi:schemaLocation="urn:mpeg:DASH:schema:MPD:2011 http://standards.iso.org/ittf/PubliclyAvailableStandards/MPEG-DASH_schema_files/DASH-MPD.xsd"
  profiles="urn:mpeg:dash:profile:isoff-live:2011"
  type="static"
  mediaPresentationDuration="PT1M0.0S"
  minBufferTime="PT8.0S">
  <ProgramInformation>
  </ProgramInformation>
  <Period id="0" start="PT0.0S">
    <AdaptationSet id="0" contentType="video" segmentAlignment="true" bitstreamSwitching="true" lang="eng">
      <Representation id="0" mimeType="video/mp4" codecs="avc1.64001f" bandwidth="1000000" width="640" height="360" frameRate="25/1">
        <SegmentTemplate timescale="12800" initialization="init$RepresentationID$.m4s" media="chunk$RepresentationID$-$Number%03d$.m4s" startNumber="1">
          <SegmentTimeline>
            <S t="0" d="51200" r="14" />
            <S d="1024" />
          </SegmentTimeline>
        </SegmentTemplate>
      </Representation>
      <Representation id="1" mimeType="video/mp4" codecs="avc1.640016" bandwidth="145000" width="416" height="234" frameRate="25/1">
        <SegmentTemplate timescale="12800" initialization="init$RepresentationID$.m4s" media="chunk$RepresentationID$-$Number%03d$.m4s" startNumber="1">
          <SegmentTimeline>
            <S t="0" d="51200" r="14" />
            <S d="1024" />
          </SegmentTimeline>
        </SegmentTemplate>
      </Representation>
      <Representation id="2" mimeType="video/mp4" codecs="avc1.64001f" bandwidth="500000" width="640" height="360" frameRate="25/1">
        <SegmentTemplate timescale="12800" initialization="init$RepresentationID$.m4s" media="chunk$RepresentationID$-$Number%03d$.m4s" startNumber="1">
          <SegmentTimeline>
            <S t="0" d="51200" r="14" />
            <S d="1024" />
          </SegmentTimeline>
        </SegmentTemplate>
      </Representation>
    </AdaptationSet>
    <AdaptationSet id="1" contentType="audio" segmentAlignment="true" bitstreamSwitching="true" lang="eng">
      <Representation id="3" mimeType="audio/mp4" codecs="mp4a.40.2" bandwidth="128000" audioSamplingRate="44100">
        <AudioChannelConfiguration schemeIdUri="urn:mpeg:dash:23003:3:audio_channel_configuration:2011" value="1" />
        <SegmentTemplate timescale="44100" initialization="init$RepresentationID$.m4s" media="chunk$RepresentationID$-$Number%03d$.m4s" startNumber="1">
          <SegmentTimeline>
            <S t="0" d="173056" />
            <S d="177152" />
            <S d="176128" r="2" />
            <S d="177152" />
            <S d="176128" r="2" />
            <S d="177152" />
            <S d="176128" r="1" />
            <S d="177152" />
            <S d="176128" r="1" />
            <S d="9216" />
          </SegmentTimeline>
        </SegmentTemplate>
      </Representation>
    </AdaptationSet>
  </Period>
</MPD>

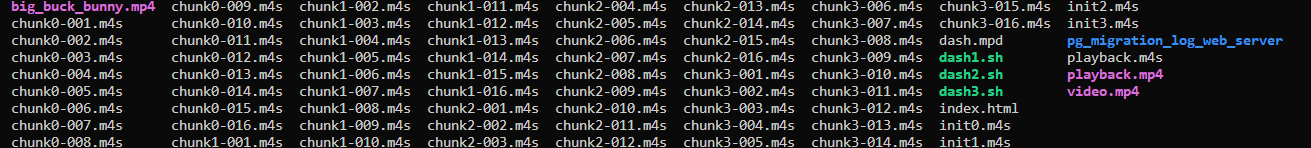

Output Folder

We can see a hierarchy that looks like:

Manifest (MPD)
  Period
    Adaptation Sets[]
      Adaptation Set : Representation ID[]
        Representation ID: {Meta-Information About Track, Timeline of Track}
        …
      Adaptation Set : Representation ID[]
        …
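For a quick look at the representations in a manifest without reading the whole file, a line-based sed sketch is enough for ffmpeg's output. This hypothetical helper is not a real XML parser. It assumes each Representation tag sits on one line with id, then mimeType, then bandwidth, as in the dash.mpd above; for anything more robust, use a proper XML tool.

```shell
#!/bin/bash
# list_representations MPD_FILE (hypothetical helper)
# Prints "id mimeType bandwidth" for each <Representation ...> line.
list_representations() {
  sed -n 's/.*<Representation id="\([^"]*\)" mimeType="\([^"]*\)"[^>]* bandwidth="\([^"]*\)".*/\1 \2 \3/p' "$1"
}

# Example: list_representations dash.mpd
```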

Representation IDs

Each -map in the DASH ffmpeg scripts creates a single output stream. This is analogous to a stream inside an mp4 file, as we saw when inspecting the original mp4 file with ffprobe. In the DASH output, each of these streams gets its own Representation ID.

With each map operation, we associated a bitrate, buffer size, max bitrate, and resolution with that video output (the codec settings are shared by all outputs).

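Each output stream has exactly one Representation ID. The dash2.sh manifest above has four of them: 0, 1, and 2 for video, and 3 for audio.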

Adaptation Sets

One or more representations belong to an "Adaptation Set". In our ffmpeg scripts, we assigned output streams to adaptation sets with:

-adaptation_sets "id=0,streams=v id=1,streams=a"

This puts all video streams into adaptation set 0 and all audio streams into adaptation set 1.

An adaptation set is a group of interchangeable versions (representations) of the same content, differing in qualities such as bitrate and resolution. The DASH client can dynamically (the "Dynamic" part of DASH) choose which representation to load future segments from. It bases this choice on conditions such as network bandwidth, screen size, or decoding capability.

So ultimately, for each segment, the DASH client loads one representation from each adaptation set over the network.
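A very simplified version of that choice can be sketched in bash with a hypothetical helper, pick_representation. It picks the highest-bandwidth representation that fits the available bandwidth and falls back to the lowest one if none fits. Real players such as dash.js use more elaborate adaptation rules (throughput estimates with safety margins, buffer levels), so treat this only as an illustration of the idea.

```shell
#!/bin/bash
# pick_representation AVAILABLE_BPS ID:BANDWIDTH... (hypothetical helper)
# Simplified rule: highest bandwidth that fits, otherwise the lowest bandwidth.
pick_representation() {
  local available=$1 pair id bw
  local best_id="" best_bw=-1 low_id="" low_bw=""
  shift
  for pair in "$@"; do
    id=${pair%%:*}
    bw=${pair#*:}
    if [ "$bw" -le "$available" ] && [ "$bw" -gt "$best_bw" ]; then
      best_id=$id; best_bw=$bw
    fi
    if [ -z "$low_bw" ] || [ "$bw" -lt "$low_bw" ]; then
      low_id=$id; low_bw=$bw
    fi
  done
  echo "${best_id:-$low_id}"
}

# Video representations from the dash.mpd above (id:bandwidth in bits/s)
pick_representation 600000 0:1000000 1:145000 2:500000    # prints 2
```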

Segment Timeline

For each stream, you can see a segment timeline that maps out the time intervals of each of the DASH chunks in that stream.

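For the video, the first entry simply has r=14. The timescale is 12800 ticks per second, so d=51200 is 4 seconds. r=14 means the entry is repeated 14 more times, so 15 segments x 4 s = 60 s for the 60-second video, which checks out. The last entry (d=1024, 0.08 s) covers the small remainder of the video.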
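The timeline arithmetic can be checked with a small bash sketch. It expands S entries the way described above (r extra repeats per entry) and totals them. timeline_summary is a hypothetical helper, and it ignores the t attribute because both timelines here start at t=0.

```shell
#!/bin/bash
# timeline_summary TIMESCALE ENTRY... (hypothetical helper)
# ENTRY is an <S> element's d value, written "d:r" when the element also has r="...".
# Prints how many segments the timeline describes and their total length in seconds.
timeline_summary() {
  local timescale=$1 entry d r count=0 ticks=0
  shift
  for entry in "$@"; do
    d=${entry%%:*}
    r=0
    case $entry in
      *:*) r=${entry#*:} ;;          # r = number of extra repeats
    esac
    count=$((count + r + 1))         # r repeats means r + 1 segments
    ticks=$((ticks + d * (r + 1)))   # durations are in timescale units
  done
  awk -v c="$count" -v t="$ticks" -v s="$timescale" \
    'BEGIN { printf "%d segments, %.2f s\n", c, t / s }'
}

# Video timeline: prints "16 segments, 60.08 s"
timeline_summary 12800 51200:14 1024
# Audio timeline: prints "16 segments, 60.14 s"
timeline_summary 44100 173056 177152 176128:2 177152 176128:2 177152 176128:1 177152 176128:1 9216
```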

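For the audio, the timescale is 44100, which is the sampling rate, so each d value is a count of audio samples. 176128 samples is about 3.99 s and 177152 samples is about 4.02 s, so these segments are also about 4 seconds long. They vary slightly because AAC encodes audio in frames of 1024 samples. Four seconds of audio is 176400 samples, which is not a whole number of frames (about 172.3). Each segment therefore has to end on a frame boundary near the 4-second mark: 172 frames (176128 samples) or 173 frames (177152 samples). Mixing the two keeps the audio segments lined up with the video's 4-second grid over time.

The same framing explains the negative start value (-0.023220 s) in the mp4 file we constructed from the m4s audio earlier. 0.023220 s is 1024/44100 s, exactly one AAC frame. The AAC encoder adds one frame of priming samples (encoder delay) at the start, and the timestamps are shifted back so that playback begins after it.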
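AAC LC codes audio in 1024-sample frames, so a 4-second segment at 44100 Hz cannot be exactly 176400 samples long. These numbers can be checked with plain bash arithmetic. This sketch only assumes the 1024-sample frame size and the 44100 Hz rate from the manifest. It reproduces the two audio segment lengths and the -0.023220 s start offset from the ffprobe output.

```shell
#!/bin/bash
# AAC LC codes audio in frames of 1024 samples; timescale 44100 = samples per second.
SAMPLE_RATE=44100
AAC_FRAME=1024
SEG_DURATION=4

target=$((SEG_DURATION * SAMPLE_RATE))   # 176400 samples in 4 s
frames_down=$((target / AAC_FRAME))      # 172 whole frames
frames_up=$((frames_down + 1))           # 173 whole frames
echo "$SEG_DURATION s = $target samples"
echo "$frames_down frames = $((frames_down * AAC_FRAME)) samples, $frames_up frames = $((frames_up * AAC_FRAME)) samples"
# One frame of encoder delay, in seconds: prints "one AAC frame = 0.023220 s"
awk -v f="$AAC_FRAME" -v r="$SAMPLE_RATE" 'BEGIN { printf "one AAC frame = %.6f s\n", f / r }'
```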

To reconstruct the original video, the client chooses the best-fitting representation from each adaptation set.